Ranking your website at the top of Google…

Let’s face it… That’s what you want. That’s what every business wants — to appear in front of qualified customers.

Yet most people optimize content for Google instead of human eyeballs. They often sacrifice readability to add more keywords. Or make an article unnecessarily long.

Herein lies the issue: poor readability affects your search rankings on Google. If it hasn’t hurt yours yet, it will.

The way forward is to optimize content for human readers, not search engine bots.


Problem: How did SEO efforts affect readability?

In the beginning, Google relied on a few factors to rank web pages.

The two most prominent were keyword density (how often a keyword appears relative to the total number of words on a page) and backlinks from external websites.

This prompted website owners to manipulate keyword density and buy backlinks. As a result, irrelevant and rubbish content often made it to the top of the search engine results page (SERP), while legitimate results got pushed to the bottom.

Over the years, Google has had to update its algorithm continually to combat black hat SEO and the low-quality content it pushed into the SERP.

Google’s purpose and its early limitations

Here’s the thing… Google exists to answer search queries.

From the beginning, its goal was to match queries (from actual humans) to the most relevant and complete results. Google wanted content written for actual people, not search engines.

Yet its search algorithm wasn’t good enough to identify genuinely relevant and useful web pages, much less rank them higher. The gap between technology and search behaviour created an opportunity, in the form of SEO, to get ahead of the competition. And this often came at the cost of readability.

But now, FINALLY, the search giant’s algorithm has caught up.

It now rewards content meant to be read by human eyeballs, not content optimized for static ranking factors. Human and SEO readability have converged!

Importance of human-readable content

Readability is a gauge of how easy it is to read and understand a written text.

It factors in both content complexity and presentation.

Content complexity looks at how you express ideas (e.g. vocabulary and sentence structure) and the education level a reader needs to understand them.

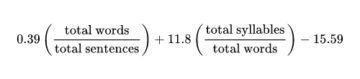

Readability tests like Flesch Reading Ease and the ARI (Automated Readability Index) are popular ways to calculate complexity directly. But they rely on oversimplified formulas that give a flawed assessment, scoring text mostly on surface counts such as the average number of words per sentence and syllables per word (Flesch) or characters per word (ARI).
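To make the “oversimplified formula” point concrete, here is a minimal Python sketch of the two tests named above, using their published formulas: Flesch Reading Ease (206.835 - 1.015 × words per sentence - 84.6 × syllables per word) and the ARI (4.71 × characters per word + 0.5 × words per sentence - 21.43). The function names are illustrative, and the syllable counter is a crude vowel-group heuristic assumed for brevity, not what any particular tool uses.

```python
import re


def count_syllables(word: str) -> int:
    # Crude heuristic (an assumption for this sketch): each run of vowels,
    # including 'y', counts as one syllable. Real tools use dictionaries;
    # this over-counts words with a silent 'e', such as "make".
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def readability_scores(text: str) -> dict:
    # Sentences: split on terminal punctuation, ignoring empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Words: runs of letters, optionally with one internal apostrophe ("it's").
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word and one sentence")

    n_sent, n_words = len(sentences), len(words)
    n_syll = sum(count_syllables(w) for w in words)
    n_chars = sum(ch.isalpha() for w in words for ch in w)  # letters only

    words_per_sentence = n_words / n_sent
    return {
        # Flesch Reading Ease: higher means easier; very simple text can exceed 100.
        "flesch_reading_ease": 206.835 - 1.015 * words_per_sentence
                               - 84.6 * (n_syll / n_words),
        # Automated Readability Index: approximates a US school grade level.
        "ari": 4.71 * (n_chars / n_words) + 0.5 * words_per_sentence - 21.43,
    }
```

Run on “The cat sat on the mat.”, this gives a Flesch score of about 116.1 and an ARI of about -5.1. Nothing in either formula looks at meaning: swap in rarer words with the same length and syllable count, and the scores stay the same.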

Presentation looks at whether you’ve formatted text (e.g. typography) in a way that complements reading behaviour, which helps prevent eye fatigue.

In short, the less time it takes people to read and understand your content, the better its readability.

This is of crucial importance for online content.

Why does online content need to be even more readable?

Consider this…

The average person doesn’t read an entire article after getting to it from the search engine results page (SERP).

Instead, they scan the content to:

- Get a sense of what it’s about (and check if it’s relevant)

- Estimate the time needed to read it (and decide whether to bookmark it for later)

- Skip to read sections that answer their immediate needs

Remember, people want content that’s relevant to them. They focus on their most pressing needs first.

At the same time, they lack the time and attention span to read closely, and most of them consume content on smartphones rather than desktops. Plus, most people read their best at a third-grade level, while half of that number read at an eighth-grade level (easy enough for 13- to 14-year-olds).

That’s why online content has to be written and formatted so that it’s scannable and easy to read, and presented in a way that reduces eye fatigue.

Now, what has changed with Google? Why is readability important from an SEO perspective?

The current state of Google’s algorithm — Hummingbird

Right now, Google uses Hummingbird as its overall algorithm.

And it has confirmed that content is one of its top two ranking factors, while RankBrain (Google’s artificial intelligence system, which uses machine learning to generate results dynamically) comes in third.

These two ranking factors indirectly require your web page to be:

- relevant to a search query

- high in quality (and holistic)

- easy to read

If not, forget about getting to the top of the SERP.

Long gone are the days of optimizing content for static factors (especially with machine learning and dynamic processing of queries). Time to optimize content for humans.

Further, the rise in smartphone use and voice search makes easy-to-read content even more important. People ignore web pages that don’t offer answers that are quick to understand yet comprehensive.

Also, can you imagine Google letting Google Assistant read awkwardly written articles aloud? Not likely to happen.

Need actual data on readability (for actual people) affecting search rankings? The following points suggest that human readability and SEO readability have converged…

7 reasons why readability is important as a ranking factor (backed by real data)

How do we even know if Google measures readability?

We don’t know for sure. Google has always kept details of its ranking factors secret (to prevent those pesky spammers from gaming the system).

But this is what we know for sure… it rewards readability.

#1 Easy-to-read pages are correlated with higher search rankings

Searchmetrics reported in 2015 that the top ten search results were “less demanding to read”. It found a positive correlation between Google SERP rankings and high Flesch readability scores.

While the Flesch formula is an oversimplified measure, it’s still a popular method to gauge readability directly. Even the Yoast SEO WordPress plugin uses it.

#2 Respun, duplicate, and shallow web pages are penalised

Before 2011, plagiarised content that covered topics only superficially was ranking on Google. It rarely answered a person’s search query and often made little sense.

These search results were due to spammers respinning copied content with an automated rewriting app. The result was content full of awkward phrases and unnatural-sounding synonyms.

As this hurt the readability and quality of search results, Google responded with the Panda algorithm update in February 2011. It stopped sites with low-quality, shallow, or duplicate content from rising to the top.

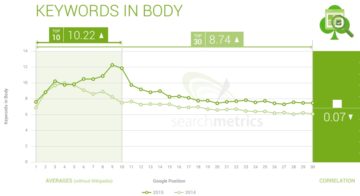

#3 Keyword density has lost its importance (and overuse is penalised)

In 2015, Searchmetrics found that the top five results had far lower keyword densities than the rest of the search results. Further, the correlation between keyword density (the share of a page’s words taken up by a keyword) and SERP rankings has dropped significantly over the years.
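For reference, here is a minimal Python sketch of one common way to compute keyword density, assuming the simple definition “keyword occurrences ÷ total words × 100”. Some SEO tools instead multiply occurrences by the number of words in the keyword phrase, so treat the exact number as tool-dependent. The function name `keyword_density` is hypothetical.

```python
import re

# Lowercase word tokens, optionally with one internal apostrophe ("don't").
_WORD = r"[a-z0-9]+(?:'[a-z0-9]+)?"


def keyword_density(text: str, keyword: str) -> float:
    """Keyword occurrences per 100 words of `text` (assumed definition)."""
    words = re.findall(_WORD, text.lower())
    phrase = re.findall(_WORD, keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide a window the length of the phrase across the text; count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * hits / len(words)
```

A stuffed line like “Cheap shoes. Buy cheap shoes here because cheap shoes are cheap shoes.” scores about 33%, while a natural sentence that uses “cheap shoes” once in 15 words scores under 7%.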

But wait… what do keywords have to do with readability?

When keyword density was a decisive ranking factor, spammers and many marketers engaged in keyword stuffing to get their web pages to the top. They’d deliberately repeat a keyword until their content became awkward and hard to read.

The Penguin update

And Google responded with the Penguin algorithm update in April 2012. It penalised web pages for keyword stuffing. This was an early move towards human-readable content.

Even so, some keywords still make for awkward reading, especially long-tail ones.

For example, imagine using “what is SEO and how it works” multiple times in one article. It’d sound unnatural, wouldn’t it?

This is why keyword density alone wasn’t a good way to gauge content relevance: optimizing for it hurt readability.

#4 Content relevance (proof terms + relevant terms) is rising in importance

In 2016, the top six search results scored higher on content relevance than results further down the SERP. This suggests that Google is now able to identify relevant content and reward it.

Now, instead of taking keywords at face value, Google also determines a person’s search intent (and the contextual meaning of a query) before serving results.

This is called semantic search, which both Hummingbird and RankBrain use. It allows Google to serve more relevant content by assessing intent and context.

But with less emphasis on keywords, how does Google identify genuinely relevant search results?

Cue… proof terms and relevant terms!

Proof terms + Relevant terms

Both proof terms and relevant terms help Google identify content that’s detailed in scope, genuinely useful, and relevant to search intent.

They also make an article less ambiguous and, hence, easier to understand. This removes the need to repeat keywords so often that an article becomes hard to read.

Both types of term are also correlated with SERP rankings, and the top 30 search results contain a higher percentage of proof terms and relevant terms.

So… what are proof terms and relevant terms!?

Proof terms: Words and phrases that you can’t help but use when genuinely discussing a specific topic. They prove to Google that your content is genuine, not keyword spam.

Example: when writing about bitcoins, some proof terms you’d use are “bitcoins”, “blockchain”, “decentralised transactions”, “cryptocurrency” and “payment network”.

Relevant terms: Terms that you use when you are covering a topic in detail (holistically), as opposed to having a shallow focus. People prefer a resource that answers their query and any follow-up questions they may have.

Example: when writing about bitcoins, relevant terms include “Ethereum”, “crypto trading”, “mining”, “ICO”, “Litecoin”, and more.
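Google doesn’t publish how it detects proof terms or relevant terms, so the sketch below is only a rough self-check for writers, not a model of Google’s method. It assumes you have drawn up your own list of expected terms (like the bitcoin examples above) and reports what share of them appear in a draft, matching whole words case-insensitively. The `term_coverage` function is hypothetical.

```python
import re
from typing import List, Tuple


def term_coverage(text: str, terms: List[str]) -> Tuple[float, List[str]]:
    """Share of `terms` found in `text` (whole-word, case-insensitive) and the missing ones."""
    lowered = text.lower()
    found: List[str] = []
    missing: List[str] = []
    for term in terms:
        # \b boundaries stop "ico" from matching inside words such as "iconic".
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        if re.search(pattern, lowered):
            found.append(term)
        else:
            missing.append(term)
    share = len(found) / len(terms) if terms else 0.0
    return share, missing
```

For a draft reading “Bitcoin runs on a blockchain secured by mining. Its iconic status grew.”, checking `["blockchain", "mining", "ICO", "Litecoin"]` returns `(0.5, ["ICO", "Litecoin"])`: a prompt to cover the missing topics if they genuinely belong in the article, not to sprinkle them in.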

#5 Dwell time has an impact on rankings

In 2016, the average time on site for the top 10 positions on the SERP was 190 seconds, dropping to 168 seconds for the top 20.

This reflects dwell time: the length of time a person stays on a search result (web page) before returning to the SERP. It tells Google how relevant a search result is, and how well it answers a search query.

If people bounce off a search result too quickly (and return to Google), that suggests the content failed to answer their query, and Google may demote the page’s ranking in response.

On the other hand, if people stay on a search result for several minutes (and don’t return to Google), the content is relevant and answers the query holistically. Holistic content anticipates other questions a person might have and answers them in detail.
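Site owners can’t see Google’s own click data, and Google hasn’t said exactly how it uses dwell time, so this is purely an illustration of the concept described above. It assumes a hypothetical log of visits, each recording when a searcher clicked a result and when they came back to the SERP (or `None` if they never did), plus an arbitrary 10-second cut-off for a “quick bounce”.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Visit:
    """One hypothetical click from the SERP to a result page (times in seconds)."""
    clicked_at: float
    returned_at: Optional[float]  # None = the searcher never came back to the SERP


def dwell_times(visits: List[Visit]) -> List[float]:
    # Dwell time = time of return to the SERP minus time of the click.
    # Visits with no return have no measurable dwell time, so they are skipped.
    return [v.returned_at - v.clicked_at for v in visits if v.returned_at is not None]


def summarize(visits: List[Visit], quick_bounce_secs: float = 10.0) -> dict:
    if not visits:
        return {"avg_dwell": None, "quick_bounce_rate": 0.0, "no_return_rate": 0.0}
    times = dwell_times(visits)
    quick = sum(1 for t in times if t < quick_bounce_secs)  # arbitrary threshold
    no_return = sum(1 for v in visits if v.returned_at is None)
    return {
        "avg_dwell": mean(times) if times else None,
        "quick_bounce_rate": quick / len(visits),
        "no_return_rate": no_return / len(visits),
    }
```

The threshold and the `Visit` log format are assumptions for the example. The point is simply that dwell time runs from the click to the return to the SERP, and that a searcher who never comes back, like the one described above, produces no dwell time at all.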

Good readability is also a huge factor. Content that is easy to read and scannable catches a person’s eye immediately. It answers their query without unnecessary complexity.

If people find your content hard to understand, or can’t find information in it because it isn’t scannable, they return to Google. And that’s not a good thing.

#6 Social signals are correlated with rankings

Social signals are the number of followers and engagements a website has on social media. Google has denied using social signals as a direct ranking factor, but…

Social signals are consistently and highly correlated with search rankings, according to reports from 2012, 2015, and 2016. Further, the top result on Google often had twice as many Facebook engagements as the second.

So social signals may have some indirect impact. And widely shared content on social media has one thing in common… good readability.

In fact, Scribblrs found that viral articles by BuzzFeed scored highly on three different readability tests. Even the most-shared content from the Huffington Post and CNN rarely exceeded a seventh-grade reading level, easy enough for a 12- or 13-year-old to read.
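Grade levels like these come from formulas too. As one example (we don’t know which three tests Scribblrs used), here is the standard Flesch–Kincaid Grade Level formula, 0.39 × words per sentence + 11.8 × syllables per word - 15.59, in Python, plus the same formula rearranged to show the longest average sentence that stays at a target grade. Both function names are illustrative.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate US school grade needed to read the text."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def max_words_per_sentence(target_grade: float, syllables_per_word: float) -> float:
    """Solve the same formula for the average sentence length that hits a target grade."""
    # target = 0.39 * wps + 11.8 * spw - 15.59  =>  wps = (target + 15.59 - 11.8 * spw) / 0.39
    return (target_grade + 15.59 - 11.8 * syllables_per_word) / 0.39
```

With 100 words, 8 sentences, and 140 syllables, the grade comes out at about 5.8. At 1.4 syllables per word, staying at or below grade seven allows sentences of about 15.6 words on average; longer words shrink that budget quickly.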

#7 Existence of Google’s readability formula (to test content complexity)

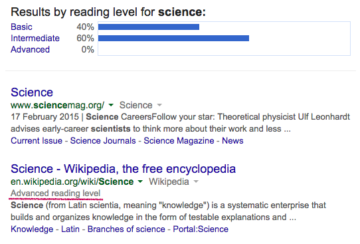

Until May 2015, you could filter search results by reading level — basic, intermediate, and advanced. This proves that Google possesses a readability formula.

What’s more… the discontinued reading level filter didn’t base results on the Flesch formula. Search results filtered to the basic reading level mostly had poor Flesch scores, and many were hard for children to read. This suggests that the filter used its own readability formula (or even a full algorithm).

Yet we don’t know whether Google uses this readability formula for regular searches, beyond the obsolete reading level filter.

Potential of Google’s readability formula

Either way, any readability formula Google uses would no doubt be complex, especially given RankBrain, semantic search, and machine learning data.

You see… regular readability formulas oversimplify the factors of content complexity. They mostly rely on averages such as syllables per word, words per sentence, and characters per word.

Google, by contrast, can connect concepts and interpret natural human language in a way that ordinary readability formulas (like Flesch) can’t. This would let it gauge content complexity in far more depth.

Moreover, Google could potentially personalize search results to a specific user’s reading level and technical expertise. For instance, “amortization” is technical jargon for the average person, but the average accountant will understand the term. This isn’t implausible because Google has already been personalizing search results by individual browsing behaviour for some time.

Even so, whether Google gauges readability directly with a formula is up for debate. What we do know is that the indirect ranking factors above rely largely on readability.

That’s why you should optimize content for a targeted human audience. When rankings depend on dynamic machine learning, there is no standard formula to optimize for SEO readability.

Bottom line — optimize content for humans; not search engines

Google’s search technology has advanced to a point where it can identify genuine, useful and relevant content. It can also personalize results to unique queries.

While there will always be a gap between technology and actual human search behaviour, it is best to write and optimize content for human eyeballs, especially now that machine learning prioritizes ranking factors dynamically.

Take a look at this article to optimize your content for readability (in particular, points 5, 6, and 7).